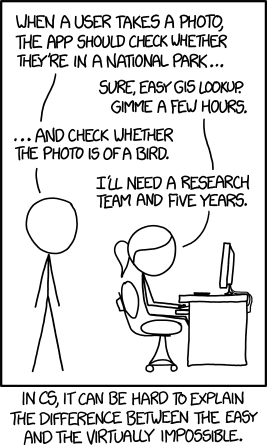

XKCD, a webcomic about geeks and geek-related topics, once had a cartoon about “the difference between the easy and the virtually impossible” in computer science:

This alludes to the “hard problems” of artificial intelligence (AI). Although computers can churn through massive number-crunching tasks, like solving equations or playing chess, they’ve struggled with understanding a question in ordinary language, or describing a scene in an image—things that even a child can do.

But that was then. This is now.

This cartoon was published in September 2014. In the eight years since then, the problem it poses has been solved.

An AI in your pocket

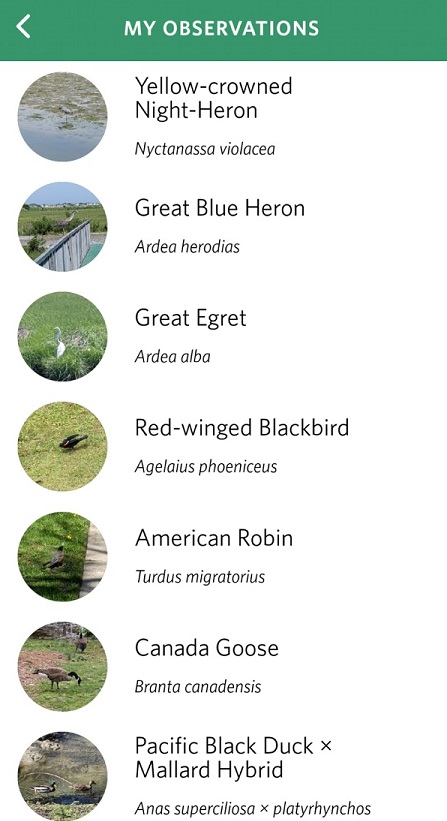

An ordinary smartphone can do it, thanks to an app called Seek by iNaturalist. Given a sharp, close-up image of a living thing, it can not only tell you whether it’s a bird (or a mammal, or a plant, or a fish, or whatever), but also identify the species. Here are some of the observations I’ve logged with mine:

This is an amazingly cool and useful tool for amateur naturalists. I use Seek habitually when I go for walks, snapping pictures of plants and animals so the app can tell me what they are. It’s improved my own taxonomic knowledge, as I’ve learned to identify some common species in my neighborhood from its results.

This powerful image-recognition capability has all sorts of uses. My smartphone has another built-in app, Google Lens, that’s like a visual search of the world. You can take a picture of something, and the app analyzes it and shows search results related to whatever it thinks the picture is of.

In 2021, my son came home from kindergarten with a goody bag from a birthday party. One of the treats in the bag was a round, flat snack that looked like a rice cake or a cracker. The package wasn’t labeled in English, and there was nothing resembling an ingredient list. Since he has food allergies, I had to find out what it was before I let him try it.

I took a picture of the unknown snack with Google Lens, and it immediately found a web page depicting the exact product, down to the brand. The page was in Chinese, but my browser (more AI wizardry!) auto-translated it to English. It was a seaweed-flavored senbei, a savory rice cracker that originated in Japan.

Artificial intelligence offers more than clever toys to assist amateur naturalists or reassurance for anxious parents. With strides in image processing, computers are moving into jobs that once seemed the sole domain of humans.

Computer vision is more than a toy

The obvious application of this technology is self-driving vehicles. We’re not at the point where a car can drive itself completely autonomously, with no human behind the wheel—but with the number of companies working on this technology, it would be foolish to assume we’ll never get there. It may not be long before we have robot taxis that can take you wherever you want to go, or autonomous trucks that can ship goods wherever they need to go with no need for breaks or sleep.

As another example, AI with computer vision can cut and sew fabric, allowing on-demand production of clothes that are custom-tailored to a person’s individual body size:

Then comes the patented machine vision system. ID Tech Ex says that it has higher accuracy than the human eye, “tracking exact needle placement to within half a millimeter of accuracy.” IEEE explains that it tracks each individual thread within the fabric. “To do that, [the company] developed a specialized camera capable of capturing more than 1,000 frames per second, and a set of image-processing algorithms to detect, on each frame, where the threads are.”

Using this high caliber machine vision and real-time analysis, the robotics then continually manipulate and adjust the fabric to be properly arranged. The Pick & Place machine mimics how a seamstress would move and handle fabric. “These micromanipulators, powered by precise linear actuators, can guide a piece of cloth through a sewing machine with submillimeter precision, correcting for distortions of the material,” says IEEE.

“SewBot Is Revolutionizing the Clothing Manufacturing Industry.” DevicePlus, 19 February 2018.

AI is changing the face of war. In its battle with Russia, Ukraine has used high-precision Brimstone missiles, which can choose their own targets within a designated strike area. They can distinguish a tank from a car or a bus. If a salvo of Brimstones is fired, they can communicate in midflight to divide up the targets among themselves.

AI is moving into medicine. Computer vision programs can analyze X-rays to diagnose cancer and other diseases, matching or even beating the performance of expert human radiologists.

In an especially shocking example of how good the technology is becoming, we may soon have robot surgeons that can operate without human help. In one trial, the Smart Tissue Autonomous Robot (STAR for short) performed laparoscopic surgery on a pig, reconnecting a severed intestine more skillfully than a human surgeon:

“Our findings show that we can automate one of the most intricate and delicate tasks in surgery: the reconnection of two ends of an intestine. The STAR performed the procedure in four animals and it produced significantly better results than humans performing the same procedure,” said senior author Axel Krieger, an assistant professor of mechanical engineering at Johns Hopkins’ Whiting School of Engineering.

From diagnosis to treatment! It doesn’t take much imagination to see how a robot doctor-in-a-box could be lifesaving for scientists at an Antarctic research base—or astronauts on a voyage to Mars.

Passing the Turing test

Artificial intelligence is also getting incredibly good at natural language—both producing it and analyzing it. GPT-3, a language engine by the company OpenAI, is one of the best yet at this task. It can answer questions, respond to writing prompts, pen original poems, and even write working computer code. It can write a scientific paper about itself.

Granted, some of its responses have that slightly incoherent quality of machine-generated text. But many are impressively coherent, even well-reasoned. Could it be that a machine that can pass the Turing test is on the horizon?

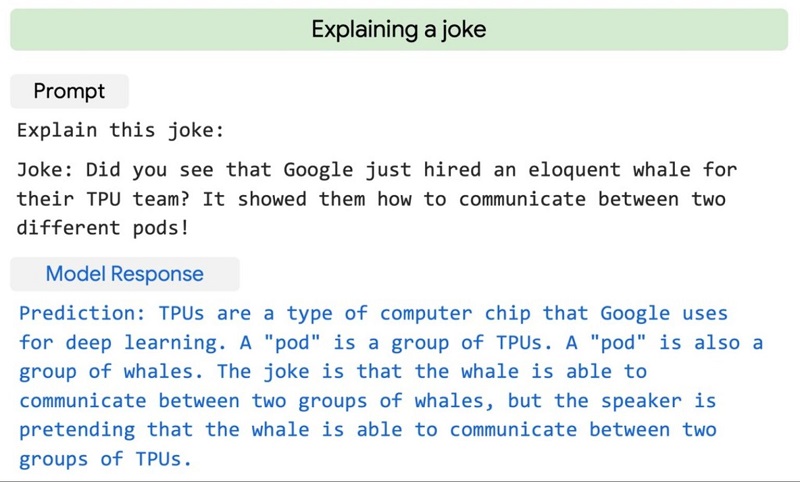

There’s also PaLM, short for Pathways Language Model, a natural-language AI developed by Google that can explain why a joke is funny:

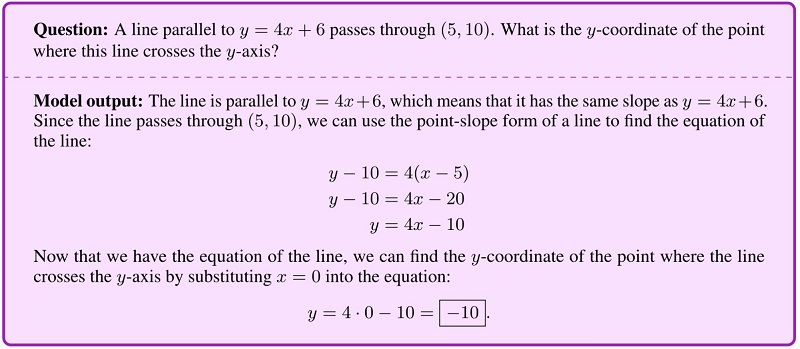

Another Google-designed system, Minerva, is able to solve math and logic problems posed in natural language:

An AI with imagination

The one that I find the most mind-blowing is DALL-E 2 by OpenAI. It makes original art. All you have to do is type out a description of what you want, and the program draws it for you. It can be photorealistic, cartoony, or any level of detail in between. It can mimic the style of any era, artist or art school.

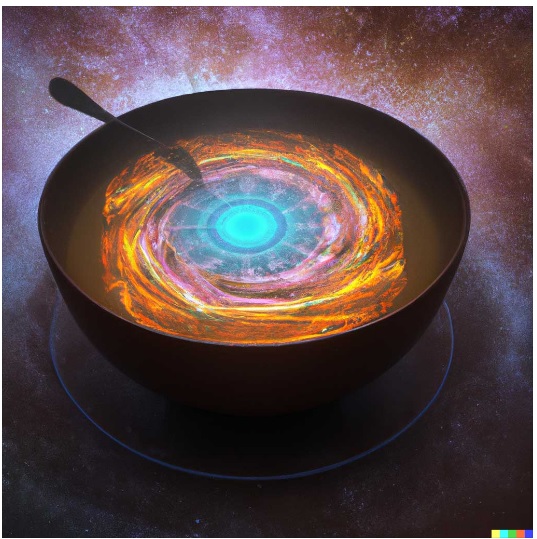

For example, here’s what DALL-E 2 generates for the prompt “a bowl of soup that is a portal to another dimension, as digital art”:

DALL-E 2 isn’t a dumb automaton cutting and pasting existing images together into a collage. It’s creative, with a positively spooky ability to comprehend abstraction, to generalize, and to create variations on a theme. It has—dare I say it?—a vivid imagination.

Here are some more examples from the DALL-E 2 Reddit forum. Yes, every one of these was created by a computer program!

- “a wide-angle lens photo of a rapper in 1990 New York holding a kitten up to the camera”

- “a medieval painting of the wi-fi not working”

- “a 1960s yearbook photo with animals dressed as humans”

- “an ancient Egyptian painting depicting an argument over whose turn it is to take out the trash”

- “clay statues representing the seven deadly sins”

- “An IT-guy trying to fix hardware of a PC tower is being tangled by the PC cables like Laokoon. Marble, copy after Hellenistic original from ca. 200 BC. Found in the Baths of Trajan, 1506.”

- “Lord of the Rings from Sauron’s perspective”

- “Dystopian Great Wave off Kanagawa as Godzilla eating Tokyo”

- “Times Square in a bottle”

There are competitors to DALL-E 2 that do the same thing, like Midjourney, Dream or CrAIyon. Google’s version is called Imagen. Some of these are closed or invite-only, but others are open to the public—you can try them out yourself. They’ve even been used to generate header images right here on OnlySky.

(How on earth can a computer do this? Here’s a semi-technical explanation of the inner workings of DALL-E 2.)

What does the future hold for humans?

Obviously, the near-term implication of AI is economic disruption. An unexpectedly broad range of careers—from radiologist to garment worker to truck driver to graphic designer—could disappear if machines prove capable of doing them quicker or more efficiently than us.

Every technology makes old industries obsolete. In that sense, AI is no different. However, this could be the final frontier: machines are encroaching on the reasoning and creative work that seemed like the last redoubt of human uniqueness. It’s undoubtedly scary to contemplate a world where human beings are less necessary.

But for the same reason, AI can free us. It’s a giant step closer to the post-scarcity utopian future where work is optional. AI can take over mundane and repetitive tasks so that we have more free time and leisure. With that reclaimed time, art engines will blow open the frontiers of creativity. Imagine every person having the computing power to bring their most vivid imaginings to life.

(Also, engines like GPT-3 and DALL-E 2, impressive as they are, still benefit from training on human-created text and images—so there won’t cease to be a role for writers and artists.)

AI can work in tandem with humans, making us better, rather than superfluous. Apps like Seek are a cognitive prosthesis, augmenting our brains by giving us on-demand information about the world. Imagine an app that did the same thing with a picture of a politician’s face, showing you their voting record or history of public statements on whatever topic you care about. Imagine an augmented-reality overlay with scientific or historical details about every object in your field of view. Such innovations could be the next stage of the Flynn effect, making each of us into literal superhuman intelligences with the sum total of humanity’s knowledge and culture in our heads.