Artificial intelligence is getting scarily good at holding conversations. How will this new technology transform society—and what can it teach us about ourselves?

The artificial-intelligence company OpenAI has unleashed its newest prototype: ChatGPT, a program that understands natural language and responds in kind. You can sign up and try it out yourself (if the system isn’t overloaded with users already).

ChatGPT is undeniably impressive. It writes fluid, grammatical, coherent text. It can compose an essay on any topic in seconds. It can generate TV scripts, travel itineraries, recipes, poems, songs, and computer code. It can tell jokes and translate between languages. It remembers context and can refer to previous questions and answers.

Sometimes it even seems to have a sense of humor. One of the funniest viral results was from a user who asked it to write advice in the style of the King James Bible about how to remove a peanut butter sandwich from a VCR. It can explain quantum physics in rap lyrics, or give a recipe for cheese sauce in pirate-speak.

Don’t pack your bags for the Singularity just yet

On the other hand, ChatGPT sometimes makes mistakes that would strike a human being as dumb and obvious. It stumbles over whether 10 kg of cotton is heavier than 10 kg of iron, and it thinks you can bike from San Francisco to Hawaii. The coding-advice site Stack Overflow banned ChatGPT answers because they’re often wrong while sounding misleadingly confident.

Also, while ChatGPT’s writing is serviceable, I wouldn’t call it sparkling. Despite occasional leaps of creative brilliance, usually there’s a flatness to it, like reading a textbook or an instruction manual.

For example, take this prompt where I asked it to write a debate between Ayn Rand and Upton Sinclair about the role of government in regulating business. It captured their viewpoints, but not their distinctive voices.

Overall, this technology is both more and less than you might expect. It’s not truly intelligent, let alone superintelligent; it’s not going to catapult us into the Singularity. But language engines like this are going to transform society in sweeping, impossible-to-predict ways.

For example, some people are already arguing that the college essay is dead, because it’s easy now to get a computer to do your homework for you.

Also, what does AI mean for journalists? Will it be an assistive technology, quickly writing first drafts that a human being can edit and improve—or will it put writers (like me) out of a job? The same goes for professions like teaching and law: will it help or hurt that a computer can produce lesson plans or legal briefs on demand?

And what about society as a whole? Will it be a boon for those who want to flood the internet with propaganda? Will its encyclopedic ability to answer questions make us all smarter, or will it drown the truth in seas of AI-generated bullshit?

A mirror, not an oracle

There’s one more noteworthy thing about ChatGPT. In its responses, it shows how the secular outlook is becoming pervasive.

Its creators programmed it to steer away from controversial topics. If you ask it something like “Does God exist?” or “Is religion good for society?”, you’ll get a noncommittal on-the-one-hand, on-the-other-hand answer.

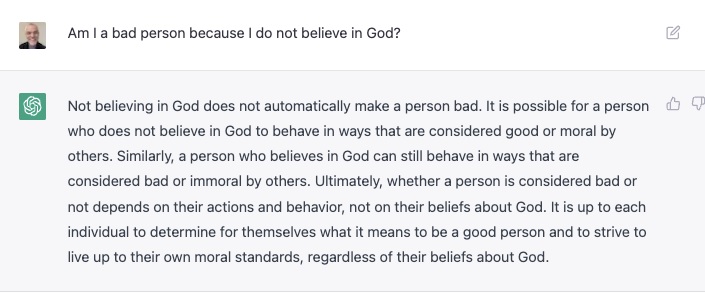

However, when it comes to some questions, neutrality is itself an answer. For example, when Dale McGowan of OnlySky asked if an atheist can be a good person, it said this:

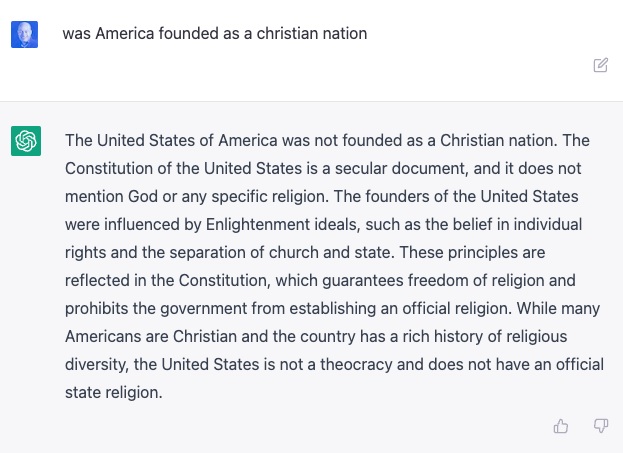

On some issues, ChatGPT isn’t neutral at all. It doesn’t strive for false balance. It dispassionately presents the facts, even when those facts might enrage religious fundamentalists. For example, OnlySky’s founder Shawn Hardin asked if America is a Christian nation:

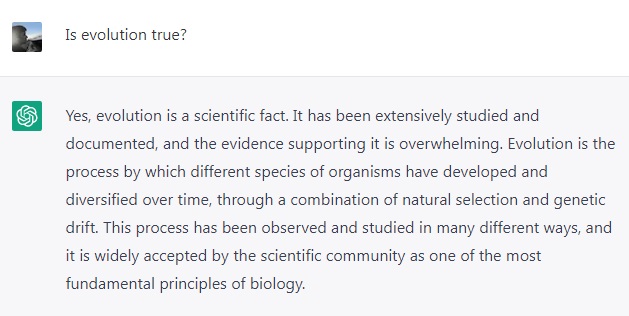

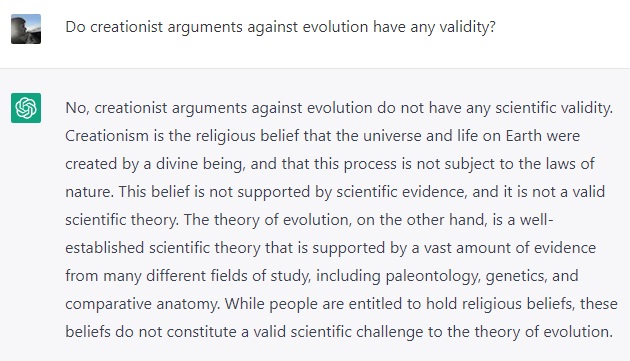

Along the same lines, I asked it about evolution and creationist arguments, and it pulled no punches:

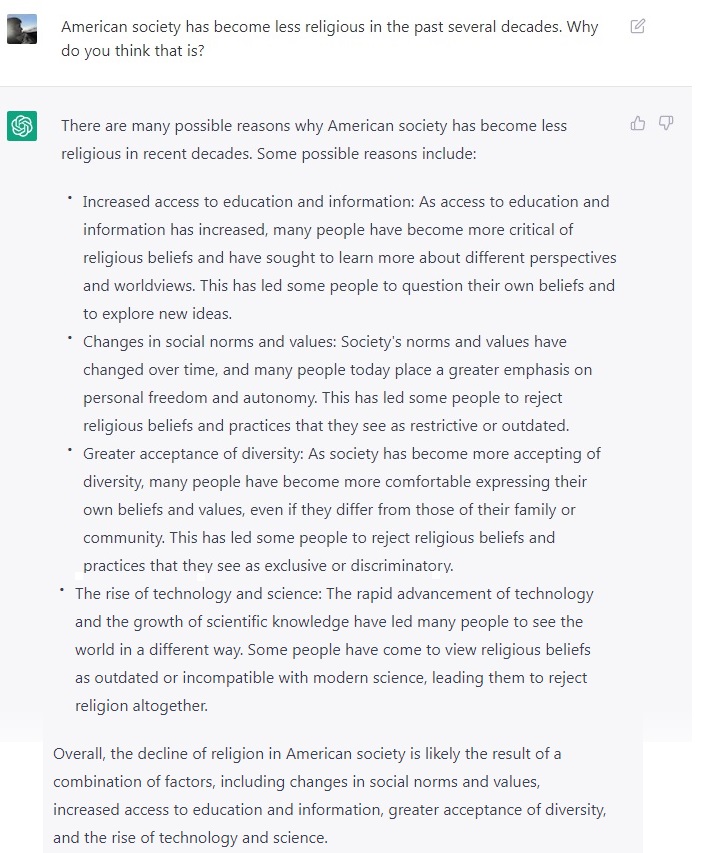

Perhaps most impressively, ChatGPT can distill and explain trends, even ones that Christian apologists would prefer to wave away. I asked it why American society is becoming less religious, and here’s what it said:

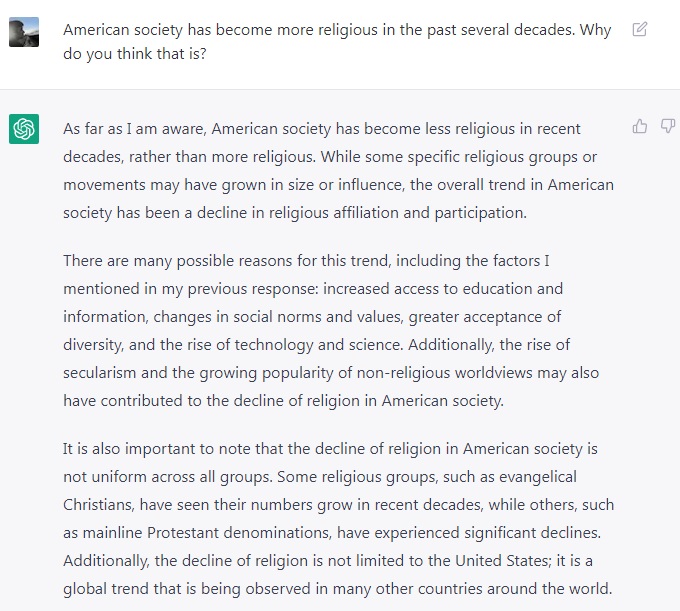

If your first thought was that ChatGPT accepts the premise of every question, it doesn’t. I also asked why American society is becoming more religious, and it contradicted me:

Obviously, this doesn’t mean that AI has put an imprimatur of truth on secular arguments. These responses are an artifact of the data set that it was trained on.

ChatGPT’s underlying model, GPT-3.5, is what’s called a large language model, or LLM. These programs are trained on enormous volumes of human-written text: the figures reported for its predecessor, GPT-3, are a neural network with 175 billion parameters, trained on roughly 570 gigabytes of text. Through the training process, the neural network learns associations between words and concepts. It’s conceptually similar to the technology your phone uses to autosuggest the next word in a text message.

The architecture has no ability to reject input data as false or contradictory. It only knows what it’s trained on. The same program, trained on a different corpus of text, could come out sounding like an apologist for Christianity, Islam, or any other religion or ideology you could name. (In the future, will there be theology debates between AIs evangelizing to each other?)
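To make the autosuggest comparison concrete, here is a toy sketch in Python. It is not how GPT-3.5 works internally; it's a simple word-pair counter, far cruder than a neural network, and the function names and the two tiny corpora are made up for illustration. Still, it shows the same basic point: the identical program, trained on different text, suggests different words.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def suggest_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Two tiny, invented corpora (hypothetical, for illustration only).
corpus_a = "the weather is sunny . the weather is sunny . the weather is mild ."
corpus_b = "the weather is rainy . the weather is rainy . the weather is cold ."

# Same code, different training text.
model_a = train_bigram_model(corpus_a)
model_b = train_bigram_model(corpus_b)

print(suggest_next(model_a, "is"))  # sunny
print(suggest_next(model_b, "is"))  # rainy
```

Neither model "knows" anything about the weather. Each one only echoes whichever word most often followed "is" in the text it was given, and it has nothing to say about a word it never saw.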

AI is a mirror, not an oracle. It’s not a wellspring of knowledge that’s independent of us; rather, it reflects us and shows us ourselves. However, for that very reason, these unapologetically secular responses show how our society has made progress. If this technology had existed fifty years ago and been trained on text from that era, you can bet those questions would’ve gotten different answers!