
What do we owe the future?

It’s a question that’s become increasingly urgent in our era. It’s at the heart of environmental justice lawsuits brought by children, who argue that states and countries have a moral duty to do more to stop climate change.

There are also influential technologists and philosophers in Silicon Valley who are preoccupied with this issue. Many of them are affiliated with the effective altruism movement and belong to a school of thought called longtermism.

For example, William MacAskill (mentor of Sam Bankman-Fried of FTX infamy) wrote in the New York Times about our obligations to future humans:

Even if humanity lasts only as long as the typical mammal species (about one million years), and even if the world population falls to a tenth of its current size, 99.5 percent of your life would still be ahead of you. On the scale of a typical human life, you in the present would be just a few months old. The future is big.

“The Case for Longtermism.” The New York Times, 5 August 2022.

Another notable longtermist is the philosopher Nick Bostrom. He argues that our overriding priority should be reducing existential risk, meaning the risk of disasters that could wipe out our species:

Our intuitions and coping strategies have been shaped by our long experience with risks such as dangerous animals, hostile individuals or tribes, poisonous foods, automobile accidents, Chernobyl, Bhopal, volcano eruptions, earthquakes, droughts, World War I, World War II, epidemics of influenza, smallpox, black plague, and AIDS. These types of disasters have occurred many times and our cultural attitudes towards risk have been shaped by trial-and-error in managing such hazards. But tragic as such events are to the people immediately affected, in the big picture of things—from the perspective of humankind as a whole—even the worst of these catastrophes are mere ripples on the surface of the great sea of life. They haven’t significantly affected the total amount of human suffering or happiness or determined the long-term fate of our species.

“Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards.” Journal of Evolution and Technology, Vol. 9, No. 1.

To be clear, long-term thinking is absolutely a good thing and very much needed. Most of our problems, including all the most serious ones, exist because humans are prone to neglecting the future. Too many people are resistant to thinking about the consequences of their actions beyond the next few years—sometimes, beyond the next quarterly income statement. Longtermism ought to be a set of corrective lenses for this moral short-sightedness.

However, many longtermists make the opposite error. They take an imaginative leap ludicrously far into the future, arguing that the highest priority is making the decisions that will be best for our descendants across “millions, billions, and trillions of years” (as longtermist Nicholas Beckstead puts it). This is well beyond where evidence can guide us.

What we can know about the future

We can and should think about the future, but we have to make decisions on the basis of what we can reasonably predict. We can make well-supported guesses about what the world might be like in fifty or a hundred years, and what the people alive at that time might want or need.

But the further into the future we go, the hazier the outlook becomes. The branches of contingency multiply. The chaos-butterfly influence of random chance looms larger. As this happens, we should put less and less confidence in our predictions.

Eventually, we reach a point where we can no longer hope to guess what the future might be like or what it could need from us. Our future vision stops at a barrier of time we can’t see beyond.

Where that barrier is, exactly, is a matter for debate. A hundred years, two hundred: perhaps. But can we pretend to have even the slightest idea of what the world will be like in a thousand years, or ten thousand? I’d say not.

Try this thought experiment. Imagine the world as it was a thousand years in the past, in the year 1022 CE.

In Europe, the land was a Dark Age patchwork of warring kingdoms and empires. The Roman Catholic and Orthodox churches were the Christian powers; Protestantism lay far in the future. Islam was in its golden age, and the Caliphate of Córdoba ruled most of Spain. Most people were illiterate, and books were expensive treasures that had to be copied by hand. Combat was by sword, spear and arrow; there were no firearms, not even the earliest cannons.

Would the people of that era have been able to conceive of what the world would be like today? Could they have guessed what problems we’d be facing, or what our technology could and couldn’t do? Would their speculations about what the future might need from them be of any value to us?

If we don’t trust the people of 1022 to guide our lives, we should refrain from dictating policy for the good of people in 3022. We should preserve our epistemic humility, and not focus on a single scenario as if it were the only possible one.

Longtermism as Pascal’s Wager

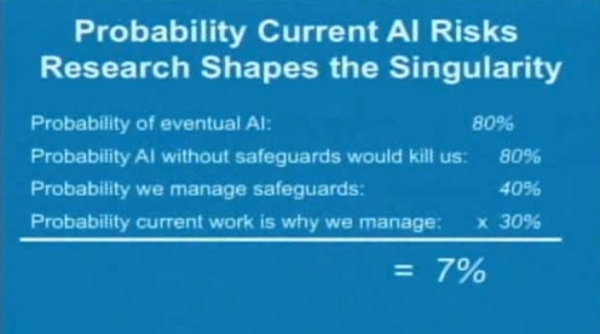

That’s why I’m skeptical of longtermists who claim, with complete confidence, that the future of humanity consists of mind uploading, simulated worlds, superintelligent AI, and space colonization. I’m equally skeptical of those who “merely” put absurdly precise probabilities on these outcomes.

Any or all of these things might happen. I’m not denying that. What I am denying is that this is the only future that can or should exist.

Especially, I deny that our sole moral obligation is to bring it into being. The dark side of longtermist thinking is the view that no evil that’s happening to living people today really matters. War, tyranny, poverty, pandemics, even climate change are all irrelevant, just so long as humanity as a whole survives.

Extreme longtermists believe the distant future will be a hyper-advanced utopian civilization populated by astronomical numbers of conscious beings, whether they’re flesh-and-blood or digital minds living in computers. (Bostrom has calculated a figure of 10^58 people.)

These numbers are to our current population as the ocean is to a drop of water, or as all the planet’s beaches are to a grain of sand. If you balance them against each other (assuming, of course, that you give merely hypothetical humans the same moral weight as actual, living people), then our world dwindles into insignificance.

Even if billions of human beings were suffering and dying before their eyes right now, these longtermist thinkers would see this as of no concern. More chilling, they might find it an acceptable price to pay, if that suffering and death led to a tiny increase in the probability of their sci-fi utopia.

Sometimes, they come close to endorsing this logic themselves. In the paper cited earlier, Nicholas Beckstead writes that “saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal”—because people in rich countries are more likely to participate in research that could bring this future about.

This, once again, is Pascal’s Wager thinking. It has the same structure as the argument of religious apologists who say that our one and only concern should be saving souls, because no earthly suffering matters compared to an eternity of bliss in heaven. It implies that the actual world, and the actual people who inhabit it right now, are worthless compared to an unevidenced future state.

This grotesque reasoning subordinates all real ethical concerns to imaginary ones. Infinitely valuable outcomes create repugnant conclusions. If a longtermist calculated even a millionth of a percent chance that staging a coup, overthrowing a nation and enslaving its populace to work on AI research would bring on the glorious future a little sooner, why shouldn’t they do it? There’s no horror that this can’t justify.
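To make the arithmetic concrete, here is a minimal sketch of the naive multiplication at work. It takes Bostrom's figure as 10^58 (ten to the fifty-eighth power) and reads "a millionth of a percent" as a probability of 1 in 10^8. The current-population figure of eight billion is my own round assumption, and the function name is purely illustrative.

```python
from fractions import Fraction

# Figures from the text, except where marked as assumptions.
FUTURE_PEOPLE = 10**58                   # Bostrom's estimate of future minds
CURRENT_POPULATION = 8 * 10**9           # assumption: roughly eight billion alive today
PROBABILITY_SHIFT = Fraction(1, 10**8)   # "a millionth of a percent" = 1e-6 / 100


def expected_future_lives(probability_shift, future_people):
    """Naive expected value: probability of success times the size of the prize."""
    return probability_shift * future_people


gain = expected_future_lives(PROBABILITY_SHIFT, FUTURE_PEOPLE)
ratio = gain / CURRENT_POPULATION

print(f"expected future lives credited to the action: {float(gain):.1e}")
print(f"multiple of everyone alive today: {float(ratio):.2e}")
```

Under these assumptions, the hypothetical payoff still outweighs every living person by dozens of orders of magnitude, which is exactly why this style of reasoning can excuse anything.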

Why we can’t “shut up and multiply”

The flaw in Pascal’s Wager is that it doesn’t work when there are multiple options for which god you should believe in. These longtermists’ logic has the same hole.

They assume that there’s only one possible future we should consider, and that their plan of action is what will take us there. The probabilities become undefined if either of these assumptions is untrue. The “shut up and multiply” approach doesn’t work when you have countless incompatible outcomes to choose from.
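A rough sketch shows how fragile the multiplication is once a rival future enters the picture. Everything here is hypothetical: the second scenario, in which the same plan slightly raises the odds of losing an equally large future, and the probabilities attached to it are invented only to show how the answer swings. Any probability not listed is assumed to belong to outcomes where the plan makes no difference.

```python
from fractions import Fraction


def expected_value(outcomes):
    """Sum probability * value over a list of (probability, value) pairs."""
    return sum(p * v for p, v in outcomes)


PAYOFF = 10**58                # Bostrom's figure, used as a stand-in for value
TINY = Fraction(1, 10**8)      # "a millionth of a percent"

# One assumed future: the plan can only help.
only_one_future = expected_value([(TINY, PAYOFF)])

# Hypothetical rival future that the plan is equally likely to destroy.
rival_equally_likely = expected_value([(TINY, PAYOFF), (TINY, -PAYOFF)])

# Same rival, guessed to be twice as likely. Neither guess has evidence behind it.
rival_twice_as_likely = expected_value([(TINY, PAYOFF), (2 * TINY, -PAYOFF)])

print(float(only_one_future))
print(float(rival_equally_likely))
print(float(rival_twice_as_likely))
```

The verdict moves from enormously good to neutral to enormously bad depending on a number nobody can measure. With countless candidate futures instead of two, there is no principled way to fill in the list at all.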

Again, we should try to predict the consequences our actions may have on the future, and we should use those predictions to inform our choices in the here and now. What I object to is the unwarranted certainty that these longtermists place in their guesses.

As a rationalist, I believe we should always bear in mind the limits of our own mental powers. Whenever possible, we should let the evidence guide us.

When evidence can’t guide us—such as when we speculate about things that haven’t yet happened—we should be more humble, and our conclusions more tentative. When it makes dogmatic claims to know what our future holds, longtermism goes against that skeptical principle. It strays into the realm of religious faith.